SSD CACHING

There are several ways that caching has been used in storage systems to improve and balance performance in highly demanding environments. Methods that use RAM for caching databases, and others that use SSDs, may specifically ignore the larger files typical of media environments. Caching in memory is more expensive per gigabyte than caching on SSDs, and it is less scalable because the number of RAM slots on a motherboard is limited. Given the large files common in multimedia environments, using SSDs for cache is a more economical way of providing both capacity and performance.

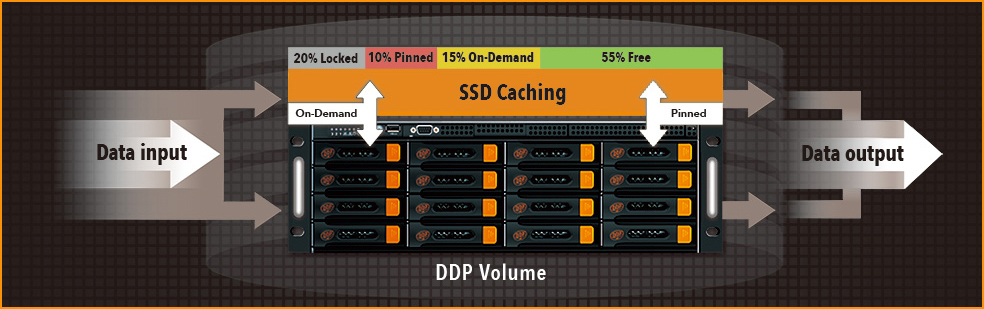

One of the more popular methods of caching is the 'On Demand' method. Here the cache is filled with the most frequently accessed files, while the least used data is pushed out of cache. The problem with this method is that we usually don't know exactly what is in the cache, and we have little control over the process. It makes sense, then, that the general rule for storage manufacturers is ‘the bigger the cache, the better’. However, a bigger cache adds substantial cost and pushes up the price of the storage server.
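As a generic illustration of the On Demand approach, the sketch below models an SSD cache that admits files as they are accessed and evicts the least frequently accessed ones when space runs out. It assumes a least-frequently-used (LFU) policy and a capacity measured in bytes; the class and method names are hypothetical, and this is not DDP's own algorithm. It also shows the control problem described above: what stays in cache is decided by access counts, not by the operator.

```python
from collections import Counter


class OnDemandCache:
    """Hypothetical on-demand SSD cache with least-frequently-used eviction.

    Assumptions: sizes are in bytes and every miss is admitted to the cache.
    A generic illustration only, not DDP's actual algorithm.
    """

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.used = 0
        self.sizes: dict[str, int] = {}  # files currently cached -> size
        self.hits: Counter = Counter()   # access count per file path

    def access(self, path: str, size: int) -> bool:
        """Record an access; return True on a cache hit, False on a miss."""
        self.hits[path] += 1
        if path in self.sizes:
            return True
        if size > self.capacity:
            return False  # can never fit; always served from HDD
        # Push out the least frequently accessed files until the new one fits.
        while self.used + size > self.capacity:
            victim = min(self.sizes, key=lambda p: self.hits[p])
            self.used -= self.sizes.pop(victim)
        self.sizes[path] = size
        self.used += size
        return False
```

For example, with a 100-byte cache holding two 40-byte files, a file read three times survives when a third file needs space, while a file read only once is evicted.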

We have developed our own, uniquely ‘DDP’ way of caching. In addition to the traditional On Demand method, we have introduced the 'Pinned' method. With Pinned Caching, an operator selects the folders or Folder Volumes on their DDP that contain the files to be cached. The data is then copied internally from the HDDs to the DDP Cache, where it stays until it is deleted. The operator has full control over the Cache and decides what files to cache and when.
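Pinned Caching can be pictured as an explicit copy from the HDD tier into the cache tier that no eviction policy touches. The sketch below assumes both tiers are ordinary directories and uses hypothetical function names (`pin_folder`, `unpin_folder`); on a real DDP the copy is performed internally.

```python
import shutil
from pathlib import Path


def pin_folder(hdd_root: Path, ssd_root: Path, folder: str) -> list[Path]:
    """Copy every file under hdd_root/folder into the SSD cache tier.

    Hypothetical sketch: the two roots stand in for DDP's internal HDD and
    SSD storage. Pinned copies are never evicted automatically; they stay
    until unpin_folder() deletes them.
    """
    src = hdd_root / folder
    dst = ssd_root / folder
    pinned = []
    for file in src.rglob("*"):
        if file.is_file():
            target = dst / file.relative_to(src)
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(file, target)  # copy data and timestamps
            pinned.append(target)
    return pinned


def unpin_folder(ssd_root: Path, folder: str) -> None:
    """Remove a pinned folder from the cache; the HDD copy is untouched."""
    shutil.rmtree(ssd_root / folder, ignore_errors=True)
```

The design point is that residency is decided by the operator's pin and unpin actions rather than by access statistics, so the contents of the cache are always known.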

For example, when a video with a very high transfer rate, such as an uncompressed DPX sequence, needs to be played, or when many rendering and transcoding processes need to be completed, the files can be Pinned to cache for maximum performance. On regular HDDs, all of these processes spend much of their time waiting for the drive heads to seek. Because SSDs have no mechanical seek time, the same operations remain efficient no matter how many clients are connected or how many files need to be rendered or transcoded.

DDP's Pinned Cache process can also be fully automated. For example, once a task has completed and its output is stored in the cache, this can trigger an internal application that copies the data to the HDDs.
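One way to picture this automation is a completion hook: when a task finishes writing into the cache, a follow-up step copies its output to the HDD tier. In this sketch the directory layout, `run_task` and `copy_to_hdd` are hypothetical stand-ins for the internal application mentioned above. The cached copy is left in place, since pinned data stays until it is deleted.

```python
import shutil
from pathlib import Path
from typing import Callable


def copy_to_hdd(cache_folder: Path, cache_root: Path, hdd_root: Path) -> Path:
    """Copy a finished task's output from the SSD cache to the HDD tier."""
    dst = hdd_root / cache_folder.relative_to(cache_root)
    # dirs_exist_ok lets repeated runs refresh an existing HDD copy.
    shutil.copytree(cache_folder, dst, dirs_exist_ok=True)
    return dst


def run_task(
    task: Callable[[Path], None],
    cache_folder: Path,
    cache_root: Path,
    hdd_root: Path,
) -> Path:
    """Run a task that writes into the cache, then trigger the HDD copy."""
    cache_folder.mkdir(parents=True, exist_ok=True)
    task(cache_folder)  # e.g. a render or transcode writing its output
    return copy_to_hdd(cache_folder, cache_root, hdd_root)
```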

DDP's Pinned Cache is persistent: it is guaranteed to remain in place, even in the event of a power failure or a reboot of the DDP. As industry workflows increasingly rely on file-based ingest with extensive transcoding and background processing, being able to control and stay aware of what is being cached lets every DDP client make use of this innovative technology.

Another unique advantage of DDP's caching methods is that the available SSD Drive Group can be partitioned between different algorithms. In addition to the Pinned and On Demand methods, DDP also lets you Lock a percentage of the SSD Drive Group to act as primary storage.
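The arithmetic of such a split is straightforward. The sketch below assumes the operator assigns a whole-number percentage to Pinned Cache and to Locked primary storage, with the remainder left to On Demand caching; `partition_ssd_group`, `SsdPartition` and the 8 TB group size are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SsdPartition:
    """Bytes assigned to each use of a (hypothetical) SSD Drive Group."""
    pinned: int
    on_demand: int
    locked: int


def partition_ssd_group(total_bytes: int, pinned_pct: int, locked_pct: int) -> SsdPartition:
    """Split an SSD Drive Group by whole-number percentages.

    Assumption: Pinned and Locked shares are set explicitly and whatever
    remains is used by the On Demand cache.
    """
    if pinned_pct < 0 or locked_pct < 0 or pinned_pct + locked_pct > 100:
        raise ValueError("percentages must be non-negative and total at most 100")
    pinned = total_bytes * pinned_pct // 100
    locked = total_bytes * locked_pct // 100
    return SsdPartition(pinned=pinned, on_demand=total_bytes - pinned - locked, locked=locked)
```

For an 8 TB group with 25% pinned and 25% locked, this gives 2 TB pinned, 2 TB locked and 4 TB for On Demand caching.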

LOAD BALANCING

Depending on how storage is architected, simply adding more spindles won't necessarily increase performance for all volumes. With DDP, volumes are Load Balanced across the storage. For example, in a 16-disk system, instead of a single 16-disk RAID 6, where all the drive heads have to seek together to find files, or two separate RAID 5 volumes with disparate files and directory paths, DDP can load balance any volume across two 8-disk RAID 5 or RAID 6 groups. In this way every volume created on the SAN benefits from a balanced load across all the disks.

Load Balancing determines which underlying RAID groups, or Data Locations (DLs), files are written to and read from. For example, with a 24-frame image sequence, the odd-numbered frames would be stored on Data Location 01 and the even-numbered frames on Data Location 02. Because the two RAID groups can seek independently, this decreases latency when seeking and playing the files. With a batch of .MXF, .MOV, etc. files, successive files would alternate between DL01 and DL02, and so on. The balancing happens at a lower level of the storage and is transparent to the user: it all appears as a single volume, with all files stored in the user's chosen folder hierarchy.
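The alternating placement described above is a round-robin assignment. The sketch below maps the n-th file (counting from 1) to a Data Location; `data_location` and `place_files` are hypothetical names, and extending the rotation to more than two DLs is an assumption about how additional RAID groups would be used, not a description of DDP's internal logic.

```python
def data_location(index: int, num_locations: int = 2) -> str:
    """Return the Data Location for the index-th file (1-based), round-robin.

    With two locations, odd-numbered files land on DL01 and even-numbered
    files on DL02, matching the 24-frame example in the text.
    """
    if index < 1 or num_locations < 1:
        raise ValueError("index and num_locations must be at least 1")
    return f"DL{(index - 1) % num_locations + 1:02d}"


def place_files(names: list[str], num_locations: int = 2) -> dict[str, str]:
    """Assign each file, in order, to a Data Location."""
    return {name: data_location(i, num_locations) for i, name in enumerate(names, start=1)}
```

Applied to a 24-frame sequence, this puts 12 frames on each Data Location, so consecutive frames can be fetched from both RAID groups at once.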

With Load Balancing, the more disks and RAID groups you add, the faster the storage becomes.

With DDP OS V4 and V5, the metadata is completely separated from the data. The metadata has a small footprint and can be replicated, while the data itself remains on the DDP. When the data is not load-balanced, the ‘Folder Volumes’ (addressing the other two DDPs) can still be used. When it is load-balanced, only the files on the other two DDPs can be used until the broken DDP has been repaired or replaced.

The upcoming V5 allows multiple DDPs on the same network to stream data and handle I/O in parallel, controlled by one master DDP that holds the metadata. The master DDP tells every desktop connected to the network where to write data to and read it from.
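The division of labor in V5 can be sketched as a metadata service that only answers "where" questions, while clients move the data directly to and from the storage nodes. In this sketch the node names, the `MetadataMaster` class and the round-robin choice of node for new files are all hypothetical; the text does not say how the master chooses a DDP.

```python
from itertools import cycle


class MetadataMaster:
    """Hypothetical master DDP holding only metadata.

    It maps each file path to the DDP node storing its data. Clients ask the
    master where to write or read, then transfer data directly to or from
    that node, so I/O to different nodes can proceed in parallel.
    """

    def __init__(self, nodes: list[str]) -> None:
        if not nodes:
            raise ValueError("at least one data node is required")
        self._next_node = cycle(nodes)
        self._locations: dict[str, str] = {}

    def where_to_write(self, path: str) -> str:
        # Existing files keep their location; new files rotate across nodes.
        if path not in self._locations:
            self._locations[path] = next(self._next_node)
        return self._locations[path]

    def where_to_read(self, path: str) -> str:
        try:
            return self._locations[path]
        except KeyError:
            raise FileNotFoundError(path) from None
```

In this model the master handles only small lookups, which fits the point above that the metadata has a small footprint while the data stays on the individual DDPs.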